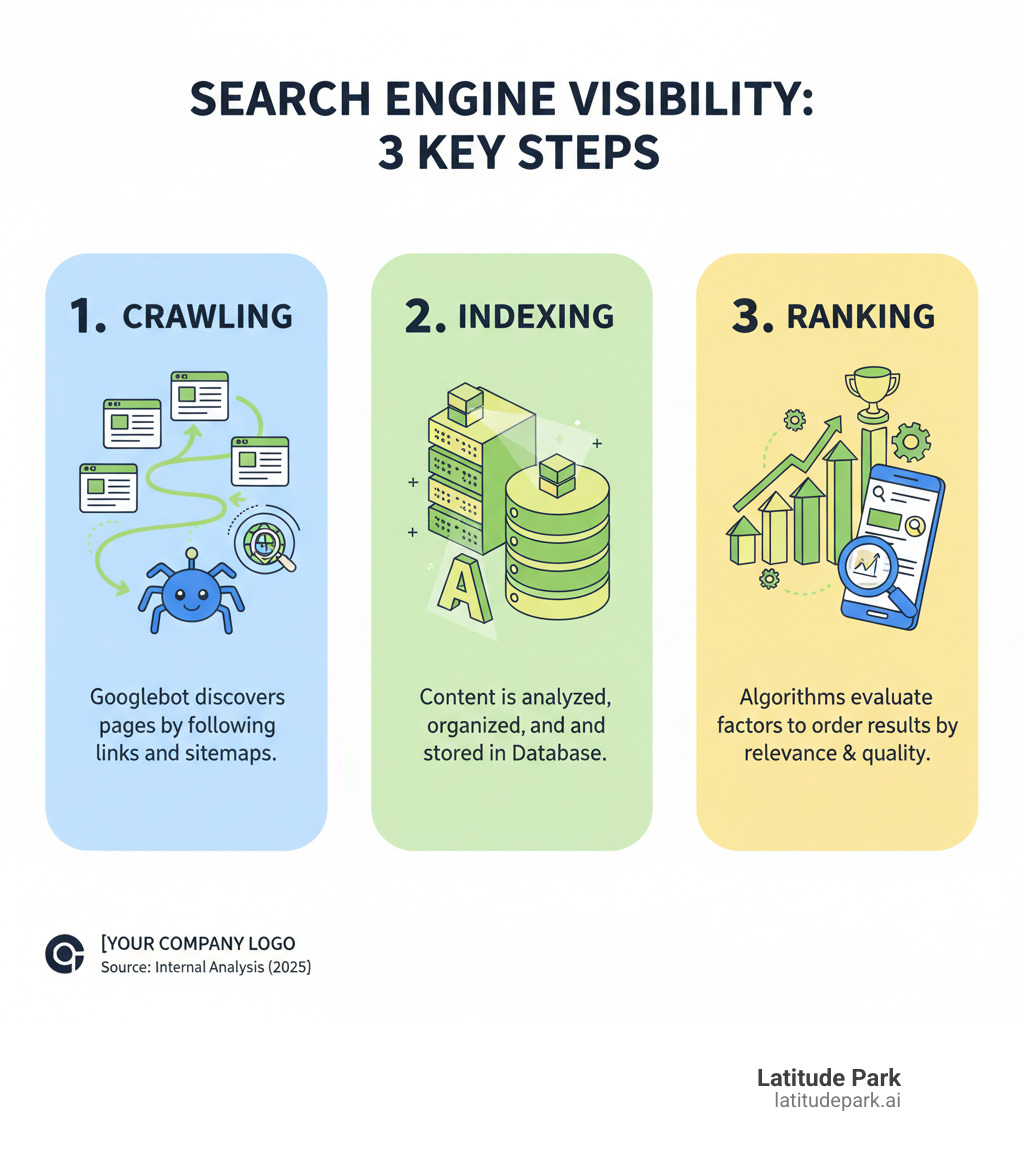

Understanding How Search Engines Work

Crawling, indexing, and ranking are the three core processes that determine if your website appears in search results. Here’s a quick breakdown:

- Crawling: Search engines use automated programs (crawlers or spiders) to find and download your web pages by following links.

- Indexing: The search engine analyzes your content, stores it in a massive database, and decides which version of similar pages to keep.

- Ranking: When a user searches, algorithms evaluate hundreds of factors to decide which pages appear first.

Think of it like a library: crawling is finding books, indexing is cataloging them, and ranking is recommending the best ones for each question.

Why This Is Crucial for Your Franchise

For a multi-location franchise, being found online is everything. If search engines can’t crawl your location pages, they won’t be indexed. If they aren’t indexed, they can’t rank. And if they don’t rank, potential customers won’t find you.

With Google handling roughly 90% of web searches, mastering these stages is essential for driving valuable organic traffic. This traffic comes from users actively searching for what you offer, like “franchise marketing near me.”

Google’s algorithms change daily, so a proactive SEO strategy is necessary to keep your content findable and highly ranked. In this guide, we’ll break down each stage and show you exactly what to do to improve your visibility.

Crawling vs. Indexing vs. Ranking: What’s the Difference?

Crawling, indexing, and ranking are three distinct stages a web page must pass through to attract customers from search. If any stage fails, your visibility collapses. Let’s clarify the role of each.

- Crawling: The Discovery Phase. This is how search engines find your content. Automated bots, like Googlebot, explore the web by following links from one page to another. They fetch your content and send it to Google’s servers. Successful crawling is the prerequisite for everything that follows.

- Indexing: The Filing Phase. After finding your content, Google must understand and store it. The search engine analyzes text, images, and other media, then stores this information in a massive database called the index. At this point, your page becomes eligible to appear in search results. This is also when Google handles duplicate content, which is critical for franchises with similar location pages.

- Ranking: The Ordering Phase. When a user enters a search query, algorithms decide which pages to show first. They evaluate hundreds of factors to determine which indexed pages best answer the user’s question, assigning a position in the search engine results page (SERP). A high ranking can dramatically impact your business.

For franchises, these distinctions are vital. You might have 50 location pages that are crawlable and indexable, but if they aren’t optimized for ranking, you’re losing business. Worse, technical issues could prevent pages from being crawled at all, making entire locations invisible online.

Stage 1: Crawling – The Discovery Phase

Before Google can rank your franchise locations, it must first know they exist. This discovery process is called crawling.

Crawling is when search engines send out automated bots (like Googlebot) to find new and updated content. These bots explore the web by following links from page to page, building a map of the internet.

How to Help Crawlers Find Your Content

We can actively guide crawlers to our content:

- XML Sitemaps: A sitemap is a file that lists all your important URLs, acting as a roadmap for search engines. For franchises with many location pages, a sitemap ensures nothing is missed. Submit it via Google Search Console.

- Robots.txt: This file tells crawlers which parts of your site to avoid, such as admin pages or duplicate content. It helps focus Google’s attention on what matters.

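- Crawl Budget: Every site has a crawl budget, meaning the number of pages Googlebot is willing and able to crawl in a given period. It depends on how quickly and reliably your server responds and on how much demand Google sees for your content. Optimizing this budget ensures your most valuable pages are crawled regularly.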

- JavaScript Rendering: Googlebot renders pages to see content loaded with JavaScript. If your site relies heavily on JavaScript, ensure it renders correctly so content is visible to Google.
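To make the sitemap idea concrete, here is a minimal sketch that builds one with Python’s standard library. The `build_sitemap` helper and the `example.com` location URLs are assumptions for illustration; a real franchise site would usually generate this list from its CMS or location database.

```python
import xml.etree.ElementTree as ET

# Namespace defined by the sitemaps.org protocol.
SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"

def build_sitemap(urls):
    """Return sitemap XML with one <url><loc> entry per URL."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for url in urls:
        entry = ET.SubElement(urlset, "url")
        ET.SubElement(entry, "loc").text = url
    return ET.tostring(urlset, encoding="unicode")

# Hypothetical location pages for an example franchise.
location_urls = [
    "https://www.example.com/locations/chicago/",
    "https://www.example.com/locations/denver/",
]
sitemap_xml = build_sitemap(location_urls)
print(sitemap_xml)
```

The output would typically be saved as `sitemap.xml` at the site root and then submitted in Google Search Console.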

Common Crawling Errors

Several issues can block crawlers and harm your visibility:

- Server Errors (5xx): These indicate a problem with your server, wasting crawl budget and preventing pages from being found.

- 404 “Not Found” Errors: These happen when a page doesn’t exist. “Soft 404s” are worse, as they report a page is “OK” (200 status) but show a “not found” message, confusing search engines.

- Robots.txt Blocks: A misconfigured robots.txt file can accidentally block important pages or entire sections of your site.

- Redirect Chains: Multiple redirects (A -> B -> C) waste crawl budget. Aim for a single redirect whenever possible.

- Infinite Spaces: Poorly designed calendars or filters can create endless loops of URLs that trap crawlers and exhaust your crawl budget.

- Login-Protected Content: Content behind a login wall is invisible to Googlebot.
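Redirect chains are one of the easier problems in this list to catch automatically. The sketch below assumes you have exported your redirect rules as a simple source-to-target mapping (the `redirects` dictionary and its paths are hypothetical) and rewrites every rule so it points straight at the final destination.

```python
def resolve_redirects(redirects, start, max_hops=10):
    """Follow the redirect map from `start` and return every URL visited."""
    chain = [start]
    while chain[-1] in redirects:
        nxt = redirects[chain[-1]]
        # Stop on loops (A -> B -> A) and on suspiciously long chains.
        if nxt in chain or len(chain) > max_hops:
            raise ValueError(f"redirect loop or overly long chain from {start}")
        chain.append(nxt)
    return chain

def flatten_redirects(redirects):
    """Point every redirecting URL directly at its final destination."""
    return {src: resolve_redirects(redirects, src)[-1] for src in redirects}

# Hypothetical rules containing the chain A -> B -> C.
redirects = {
    "/old-chicago": "/chicago",
    "/chicago": "/locations/chicago/",
}
flat = flatten_redirects(redirects)
print(flat)
```

After flattening, both old URLs redirect in a single hop, so crawlers spend one request instead of two to reach the live page.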

Stage 2: Indexing – Building the Search Library

Once a page is crawled, it must be indexed. If crawling is finding the book, indexing is reading it, understanding it, and adding it to a massive, searchable library.

Indexing is where Google analyzes a page’s content and stores that information in the Google index. Not every crawled page makes it into the index; Google is selective, prioritizing helpful and reliable content.

Indexing Suitability Criteria

For a page to be indexed, it must meet certain criteria:

- Content Quality: Pages with thin, unoriginal, or unhelpful content are often ignored. Google wants to index valuable content that serves a purpose for the user. Refer to Google’s helpful content guide for more.

- Mobile-Friendliness: With mobile-first indexing, Google primarily uses the mobile version of your site. A poor mobile experience can prevent indexing.

- Page Metadata: Title tags and meta descriptions provide context, while header tags (H1, H2) structure your content, helping Google understand its hierarchy.

- Canonicalization: When multiple URLs have similar content (common for franchises), Google selects one “canonical” URL to index. This prevents ranking signals from being diluted.
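If you want to see which canonical URL a page actually declares, a small parser is enough. This is a rough sketch using Python’s built-in `html.parser`; the `CanonicalFinder` class and the sample markup are assumptions, and it only reads the `<link rel="canonical">` tag, not canonical hints sent through HTTP headers or sitemaps.

```python
from html.parser import HTMLParser

class CanonicalFinder(HTMLParser):
    """Collect the href of every <link rel="canonical"> tag on a page."""

    def __init__(self):
        super().__init__()
        self.canonicals = []

    def handle_starttag(self, tag, attrs):
        attr = dict(attrs)
        rels = (attr.get("rel") or "").lower().split()
        if tag == "link" and "canonical" in rels and attr.get("href"):
            self.canonicals.append(attr["href"])

def find_canonicals(html):
    """Return all canonical URLs declared in the given HTML."""
    finder = CanonicalFinder()
    finder.feed(html)
    return finder.canonicals

# Hypothetical location page markup.
page = (
    "<html><head>"
    '<link rel="canonical" href="https://www.example.com/locations/chicago/">'
    "</head><body>Chicago</body></html>"
)
print(find_canonicals(page))
```

A page that returns no canonical, or more than one, is worth a closer look, since Google then has to guess which version to keep.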

Common Issues That Prevent Indexing

Even after a successful crawl, a page might not be indexed due to:

- Low-Quality Content: This is the most common reason. Google won’t index pages it deems unworthy. Ensure your franchise location pages offer unique, valuable information.

- “Noindex” Meta Tags: An accidental `noindex` tag tells Google to skip a page. Always double-check this on important pages.

- Website Design Issues: Complex JavaScript or content hidden in non-text formats can prevent Google from fully understanding and indexing a page.

- Canonicalization Errors: Incorrect canonical tags can confuse Google, leading it to index the wrong page or ignore content altogether.

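- Accidental Robots.txt Blocks: A robots.txt file controls crawling, not indexing, but if a page is blocked from being crawled, Google cannot read its content. The bare URL may still be indexed if other sites link to it, yet without content it is unlikely to rank for anything useful.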

Use the URL Inspection tool in Google Search Console to diagnose indexing issues for specific pages.
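For a quick local check before a page goes live, you can scan its HTML for a stray noindex directive. The sketch below is an illustration only: `RobotsMetaFinder` and `has_noindex` are hypothetical names, and it looks solely at `<meta name="robots">` and `<meta name="googlebot">` tags, so a noindex sent through the `X-Robots-Tag` HTTP header would not be caught.

```python
from html.parser import HTMLParser

class RobotsMetaFinder(HTMLParser):
    """Gather directives from robots / googlebot meta tags."""

    def __init__(self):
        super().__init__()
        self.directives = set()

    def handle_starttag(self, tag, attrs):
        attr = dict(attrs)
        name = (attr.get("name") or "").lower()
        if tag == "meta" and name in ("robots", "googlebot"):
            content = attr.get("content") or ""
            # Directives are comma-separated, e.g. "noindex, follow".
            self.directives.update(
                part.strip().lower() for part in content.split(",") if part.strip()
            )

def has_noindex(html):
    """Return True if the page's meta tags include a noindex directive."""
    finder = RobotsMetaFinder()
    finder.feed(html)
    return "noindex" in finder.directives

print(has_noindex('<meta name="robots" content="noindex, follow">'))
print(has_noindex('<meta name="robots" content="index, follow">'))
```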

Stage 3: Ranking – Delivering the Best Answers

After being crawled and indexed, your pages are eligible to appear in search results. The final stage, ranking, determines their position. This is where Google’s algorithms order pages to provide the best answer to a user’s query.

When you search, algorithms sift through the index and evaluate hundreds of factors to present the most relevant, high-quality results. Understanding these factors allows us to optimize strategically and earn top visibility.

Key Factors That Influence Page Rank

While Google’s exact formula is secret, many key ranking signals are well-known:

- Content Quality and Relevance: Your content must be comprehensive, accurate, and directly address user intent. Google rewards content created for people, not just for search engines.

- Backlinks: Links from other reputable websites act as votes of confidence, signaling that your content is authoritative. Quality matters more than quantity.

- Internal Links: A good internal linking structure helps distribute authority throughout your site and guides users and crawlers to important content.

- User Engagement Signals: Metrics like click-through rate and how long users stay on your page can indicate content quality to Google. If users are satisfied, it’s a positive signal.

- Page Speed and Core Web Vitals: Fast-loading pages with a good user experience (interactivity, visual stability) rank better.

- Mobile-First Indexing: Google primarily uses the mobile version of your site for ranking, so a strong mobile experience is crucial.

- Local SEO Factors: For franchises, local signals are key. These include relevance (how well your business matches the query), distance (proximity to the searcher), and prominence (online reputation, reviews, and citations).

The Role of AI and Algorithms in Ranking

Modern search is powered by sophisticated AI and algorithms:

- RankBrain: A machine-learning system that helps Google understand the intent behind ambiguous queries.

- NavBoost: A system that adjusts results based on user interactions, learning from which results users prefer.

- Algorithm Updates: Google makes daily tweaks and periodic “core updates” to improve search quality. These often focus on rewarding experience, expertise, authoritativeness, and trustworthiness (E-E-A-T).

- Personalization: Search results are influenced by user location, device, and language, creating a customized experience.

Understanding crawling, indexing, and ranking as interconnected stages is key. You can’t rank without being indexed, and you can’t be indexed without being crawled.

How to Master Crawling, Indexing, and Ranking for SEO Success

Understanding the theory is one thing; putting it into practice is another. A holistic SEO strategy combines technical SEO (accessibility), on-page SEO (content optimization), and off-page SEO (authority building) to address every stage of the search process.

For franchises, this is critical. Each location page must be crawlable, indexable, and optimized to rank for local searches. Let’s break down the essential actions.

Optimizing for Crawling and Indexing

First, make it easy for search engines to find and understand your content.

- XML Sitemaps: Create an accurate sitemap listing only the URLs you want indexed and submit it via Google Search Console. Learn about sitemaps.

- Robots.txt File: Configure this file to allow access to important pages while blocking crawlers from private areas. Audit it regularly to prevent accidental blocks.

- Clean URL Structure: Use simple, human-readable URLs (e.g., `/locations/chicago/`) that help users and search engines understand the page’s content.

- Internal Linking: Create clear pathways between pages to help crawlers find content and to distribute authority across your site.

- Canonical Tags: Use canonical tags to tell search engines which version of a page is the “real” one, consolidating ranking signals for similar pages.

- Schema Markup: Add schema markup to provide explicit clues about your content, such as business address and hours, to enable rich snippets in search results.
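A regular robots.txt audit can be partly automated. Here is a minimal sketch using Python’s standard `urllib.robotparser`; the `blocked_urls` helper, the sample rules, and the `example.com` URLs are assumptions for illustration. Python’s parser may not interpret wildcard rules exactly as Googlebot does, so treat the result as a first pass rather than a final verdict.

```python
from urllib.robotparser import RobotFileParser

def blocked_urls(robots_txt, urls, user_agent="Googlebot"):
    """Return the URLs that the given robots.txt rules disallow."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [url for url in urls if not parser.can_fetch(user_agent, url)]

# Hypothetical rules where /locations/ was blocked by mistake.
robots_txt = """\
User-agent: *
Disallow: /admin/
Disallow: /locations/
"""

important_pages = [
    "https://www.example.com/locations/chicago/",
    "https://www.example.com/about/",
]
blocked = blocked_urls(robots_txt, important_pages)
print(blocked)
```

Any important page that shows up in the `blocked` list points to a rule that needs fixing before the page can be crawled.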

A Website Owner’s Guide to Improving Ranking

Once your technical foundation is solid, focus on content and user experience.

- High-Quality Content: Write for people, not search engines. Create original, valuable content that answers user questions and establishes your expertise.

- Title Tags: Write unique, compelling titles under 60 characters. They are a primary ranking signal and the first thing users see in search results.

- Meta Descriptions: While not a direct ranking factor, well-written meta descriptions (around 150-160 characters) entice users to click.

- Header Tags (H1, H2): Structure your content with headers to improve readability and signal the topic hierarchy to search engines.

- Image Alt Text: Provide descriptive alt text for all images to improve accessibility and give search engines context.

- Mobile Optimization: Ensure your site is responsive and loads quickly on mobile devices. Use a tool such as Lighthouse in Chrome DevTools to check your pages.
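With dozens of location pages, checking titles and meta descriptions by hand gets tedious. The sketch below applies the 60-character and 160-character guidelines from this list and also flags duplicate titles. The `audit_snippets` function and the sample `pages` data are hypothetical; in practice you would feed in values exported from your CMS or a crawl.

```python
from collections import Counter

MAX_TITLE = 60          # title length guideline from the list above
MAX_DESCRIPTION = 160   # upper end of the 150-160 character range

def audit_snippets(pages):
    """Map each URL to a list of title / meta description warnings."""
    title_counts = Counter(page["title"] for page in pages.values())
    report = {}
    for url, page in pages.items():
        warnings = []
        if len(page["title"]) > MAX_TITLE:
            warnings.append("title too long")
        if title_counts[page["title"]] > 1:
            warnings.append("duplicate title")
        if len(page["description"]) > MAX_DESCRIPTION:
            warnings.append("description too long")
        report[url] = warnings
    return report

# Hypothetical page data for an example franchise.
pages = {
    "/locations/chicago/": {"title": "Our Locations", "description": "Visit us in Chicago."},
    "/locations/denver/": {"title": "Our Locations", "description": "Visit us in Denver."},
    "/about/": {"title": "About Example Franchise", "description": "x" * 200},
}
report = audit_snippets(pages)
for url, warnings in report.items():
    print(url, warnings)
```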

Avoiding Penalties and Ensuring Compliance

Violating Google’s quality guidelines can lead to devastating penalties. Avoid black-hat SEO tactics like keyword stuffing, buying links, or cloaking. These shortcuts promise quick results but risk long-term damage.

Penalties can be manual (from a human reviewer) or algorithmic (from an algorithm update). If you’re penalized, you’ll see a sudden drop in traffic. The best defense is to focus on creating a valuable, user-friendly experience that follows Google’s spam policies. Sustainable SEO comes from earning your rank, not trying to trick the system.

Frequently Asked Questions about Search Engine Mechanics

Crawling, indexing, and ranking can seem complex. Here are answers to some of the most common questions we hear.

How long does it take for a new page to be crawled, indexed, and ranked?

There’s no fixed timeline, as it depends on your site’s authority and health. Crawling can take days or weeks for a new site but happens constantly for major news sites. Indexing typically follows within a few days to a few weeks after a successful crawl. Ranking is a continuous process; your page’s position will fluctuate as Google reevaluates it against competitors and user signals. To speed things up, ensure new pages are linked from authoritative parts of your site and submit an XML sitemap via Google Search Console.

Can I pay to have my site crawled or ranked higher?

No. You cannot pay Google to crawl your site more often or to improve your organic rankings. Organic search (SEO) is about earning rank through quality and relevance. Paid search (PPC) involves buying ads that appear separately from organic results. While ads provide immediate visibility, they have no impact on your organic SEO performance or your site’s crawl budget.

What is the best way to check if my pages are indexed?

There are two primary methods:

- Google Search Console (GSC): This is the most reliable tool. The “Page indexing” report (formerly “Index Coverage”) shows the status of all your pages. For individual URLs, the URL Inspection Tool provides detailed information on crawl and index status, and allows you to request re-indexing. Sign up for Search Console.

- “site:” Search Operator: For a quick check, type `site:yourdomain.com/your-page-url` into Google. If your page appears, it’s indexed. This method is less precise than GSC but is useful for a fast spot-check.
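If you spot-check many location pages, it can help to generate the “site:” searches rather than typing them. This small sketch builds the search URL for a page; the `site_query_url` helper and the `example.com` address are assumptions, and you would open the resulting links in a browser yourself.

```python
from urllib.parse import urlencode

def site_query_url(page_url):
    """Build a Google search URL that runs a site: check for one page."""
    # The operator is written as site:domain/path, so drop the scheme.
    target = page_url.split("://", 1)[-1]
    return "https://www.google.com/search?" + urlencode({"q": f"site:{target}"})

check_url = site_query_url("https://www.example.com/locations/chicago/")
print(check_url)
```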

Conclusion: From Invisible to Unmissable

The journey from a new page to a top search result is built on crawling, indexing, and ranking. Understanding these three pillars is the foundation for connecting with customers when they need you most.

- Crawling is how search engines find your pages.

- Indexing is how they analyze and store your content.

- Ranking is how they decide which page best answers a query.

For franchise locations, mastering this process is the difference between a customer finding your nearest branch or a competitor’s. As Google’s algorithms evolve daily, a continuous SEO strategy is essential. You must regularly check that pages are crawlable, content meets quality standards, and rankings remain strong.

By taking deliberate action—fixing technical issues, creating valuable content, and building authority—you can transform your online visibility. Each improvement builds on the last, making your brand progressively harder to miss.

At Latitude Park, we specialize in helping multi-location franchises navigate these complexities. We manage the technical details of SEO so you can focus on running your business, ensuring customers can find you across all your territories.